MaaS (Metric As A Service)

How to build a team habit of looking at metrics and connecting them with product success

Introduction

There is no question that making decisions based on data is one of the key tenets of product management. Having data at hand about product usage is like shining a flashlight into the darkness.

What the data exposes can be rewarding, such as growth heading towards the upper right or a retention curve flattening at a certain level. At other times it can be disheartening, because the numbers are going in the opposite direction to what you wished.

It’s a humbling and eye-opening exercise, especially when you can share it with the entire team. This is something I’ve been striving for over the last year with the product we have built, and I would like to share some tricks and bruises from my experience of trying to be a bit more data-driven.

Main Learnings

Data thinking starts way before you deploy your product

Most product people will tell you in conversation that you must define the success criteria, and how you will measure the usage of your product, before you even start building anything. The reality is sometimes different.

One of the first things that creates the habit of thinking in data and metrics is the success criteria of the feature or product you’re implementing. How is the team going to know whether the product or feature is successful or not? The criteria could be either quantitative or qualitative. Regardless of the specific metric you choose, defining it is a forcing function for thinking about how you are going to measure it.

You would be surprised how many teams defer this until they are about to deploy the feature or product to production, and then have to rush to implement something that produces the data behind what they have defined as success.

One trick I’ve used: for every single feature or product we add to the roadmap, we always add the success criteria, even without any measurement or data, to anchor ourselves to the fact that at some point we will have to discuss how to know whether we succeeded or not.
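To make the trick concrete, here is a minimal sketch in Python of a check that flags roadmap entries with no success criteria yet. The `RoadmapItem` type, its fields, and the example entries are all hypothetical and only illustrate the idea; they are not taken from any real roadmap tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoadmapItem:
    """A roadmap entry. All field names here are hypothetical."""
    name: str
    # Plain-language statement of success; no numbers are required at this stage.
    success_criteria: Optional[str] = None


def missing_success_criteria(items: list[RoadmapItem]) -> list[str]:
    """Return the names of roadmap items that still lack a success criterion."""
    return [
        item.name
        for item in items
        if not (item.success_criteria and item.success_criteria.strip())
    ]


# Made-up entries, for illustration only.
roadmap = [
    RoadmapItem("Bulk export", "Customers export reports without contacting support"),
    RoadmapItem("New onboarding flow"),
    RoadmapItem("Dark mode", "   "),
]

print(missing_success_criteria(roadmap))  # ['New onboarding flow', 'Dark mode']
```

The point of keeping the criterion as free text is that it costs nothing to write early, and an empty field is a visible reminder that the conversation about measurement still has to happen.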

Data should be a means to an end

Measuring and obtaining data is not the end in itself. It’s a compass that tells the team whether it is heading in the right direction, so it can correct course if necessary.

The first thing I did to kick off our efforts to be more data-driven, after adding the success criteria to every feature or product, was to show the entire team the journey I had mapped in Pendo (a product tracking tool).

Knowing that most engineers are curious by nature, I presented the situation: the flow we had in our minds when we designed the feature was being used in a completely different way by our customers.

The initial reaction from engineers is to rationalise the customer behaviour by saying things like “The customer doesn’t know ….” or “I know we don’t represent Y in the right way”. This is what you want at first: a level of discomfort that gets them to start explaining why it happens.

Then comes the part where you ask them to hold their thoughts, to avoid biasing the rest of the team, and to silently jot down what they think explains the behaviour.

What I’m trying to convey here is that we don’t hold the meeting to explain the data or why the customer clicks on X or Y, but to validate what is going on and understand why the product is used the way it is. The way you frame these sessions is half of the job.

Start where you are now

It is easy to fall into the perfection trap and try to measure every single data point. We tend to think that more is better.

On the contrary, if you are just starting, less is more. The main reason is that, as you start sharing data, the natural reaction is to ask questions. You reach a dead end when you don’t have the data to know more, so you are immediately drawn into understanding what you need to answer them. It’s a reinforcing cycle.

To our surprise, we started in this order:

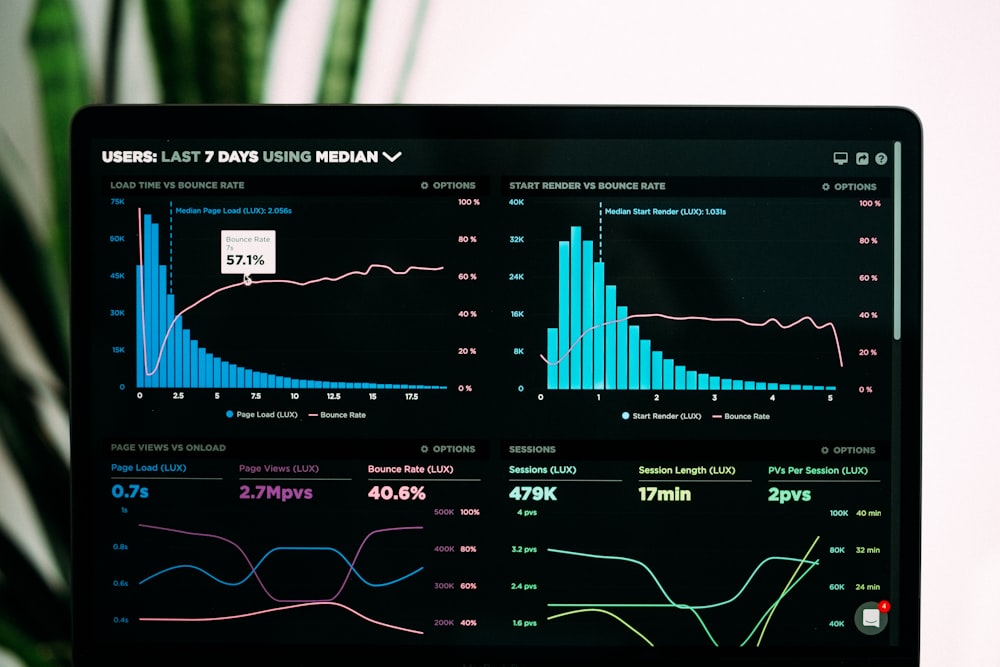

First, we created a dashboard to understand whether our product was successful or not.

Later, we tracked the actions the customer was performing with our product.

Later, we started to collect information about our performance to ensure we were providing the expected SLA / SLI / SLO (even this step took us a couple of months).

Now, we combine the different data sources to build a comprehensive understanding of the product and solution (this is done in a weekly meeting).

Now, we want to add unit economics to know how our product contributes to the overall business outcomes.

As you can see, we couldn’t have done all of the above in one shot. Be patient: measure what matters first, then combine what you need to get a better understanding of how your product is used.
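For the performance step in the list above, a minimal sketch of how an SLI can be compared against an SLO might look like the following. It assumes a simple request-based availability indicator; the function names, the request counts, and the 99.9% target are hypothetical and are not the indicators we actually used.

```python
def availability_sli(successful_requests: int, total_requests: int) -> float:
    """SLI: the measured fraction of requests that succeeded."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return successful_requests / total_requests


def error_budget_remaining(sli: float, slo_target: float) -> float:
    """Fraction of the error budget still unspent.

    1.0 means no failures so far, 0.0 means the budget is exhausted,
    and a negative value means the SLO has been missed.
    """
    allowed_failure = 1.0 - slo_target
    if allowed_failure <= 0:
        raise ValueError("slo_target must be below 1.0")
    actual_failure = 1.0 - sli
    return 1.0 - actual_failure / allowed_failure


# Hypothetical numbers for one reporting window.
sli = availability_sli(successful_requests=99_950, total_requests=100_000)
remaining = error_budget_remaining(sli, slo_target=0.999)

print(f"SLI: {sli:.2%}")
print(f"Error budget remaining: {remaining:.0%}")
```

The SLO is the target you commit to internally, the SLI is what you actually measure, and the gap between them is what a weekly review can look at.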

Data is a team activity

As you can probably infer from the previous points, I was the first on the team to attempt to answer questions using data. I don’t know if I feel proud of it, but some interactions with C-level executives showed me how much they care about numbers, and about data specifically.

My mission as a PM is, to some extent, to cultivate the importance of using data to discover how the product is being used, to decide which areas to invest in, and much more.

Unless your team has had some experience in the past, you need to nurture the ability to use data as an input for knowing what is going on and for seeing the effect some actions are having on the product.

One indicator of success is that engineers start to ask questions like “What are the metrics that will tell us if the customer is doing X?”, “Do we need to measure the performance of this component?”, or “What telemetry will give us confidence to keep investing here?”.

The other side of the same coin is that, as you gain experience with metrics, you must start connecting the dots between inputs, outputs, product outcomes, customer outcomes, and business impact. This way you have the entire picture of:

attributes that could make your product better

the performance of the output you’re creating

the product / customer outcomes to see if customers are performing the action you expect them to perform and if they are making progress

how you are contributing to the success of the business (adding new revenue, preserving revenue, or improving margins).

Show progress, not perfection

We didn’t start by collecting hundreds of data points at once. One of the biggest AHA moments for us was when we showed in a Product Review how a change we had discovered during our metric review meetings had reduced by 50% the number of errors our customers hit while using our product.

We received warm congratulations from the entire leadership team, and it also had the reinforcing effect of confirming that we were making progress towards improving the product and the customer experience.
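For anyone who wants to report this kind of result, the arithmetic behind a "reduced by 50%" statement is a relative reduction between two comparable periods. Here is a minimal sketch; the error counts are made up purely for illustration and are not our real numbers.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a count dropped between two comparable periods."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


# Hypothetical weekly error counts, before and after the change.
errors_before = 240
errors_after = 120

print(f"{relative_reduction(errors_before, errors_after):.0%}")  # 50%
```

Comparing periods of the same length, with similar traffic, is what keeps a number like this honest.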

Sometimes showing progress is the easiest thing you can do, rather than measuring millions of things and not making any progress.

We now look at the data points to see how our product is performing AND to come up with hypotheses that can be turned into tests or experiments we can run later to keep improving the product.

Ask and give help

One of my first jobs at Nexthink was to participate in deciding which Product Analytics tool to adopt. We ended up choosing Pendo, but my main takeaway from that experience was the value of working side by side with someone who has much more experience in the data field.

We came up with the taxonomy for tagging the different events in our product, and also with a series of questions that different teams could use to start implementing telemetry in basic ways.
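A taxonomy only helps if event names actually follow it, so a small check can be useful. The sketch below assumes a purely hypothetical convention of three lowercase segments, `area.object.action`; it is not the taxonomy we defined, and it does not use any Pendo API.

```python
import re

# Hypothetical convention: exactly three snake_case segments separated by dots,
# for example "dashboard.widget.added".
EVENT_NAME = re.compile(r"[a-z][a-z0-9_]*(\.[a-z][a-z0-9_]*){2}")


def is_valid_event_name(name: str) -> bool:
    """True if the whole name matches the assumed area.object.action pattern."""
    return EVENT_NAME.fullmatch(name) is not None


def invalid_events(names: list[str]) -> list[str]:
    """Return the event names that break the convention, in their original order."""
    return [name for name in names if not is_valid_event_name(name)]


# Made-up event names, for illustration only.
events = [
    "dashboard.widget.added",
    "Dashboard.Widget.Added",
    "widget_added",
    "settings.api_key.rotated",
]

print(invalid_events(events))  # ['Dashboard.Widget.Added', 'widget_added']
```

Whatever convention a team picks, agreeing on it before the first events are tagged is much cheaper than renaming them later.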

Don’t hesitate to reach out to other people or teams who may be more proficient in this topic. The same applies in reverse: once you have gained a lot of knowledge from digging into data and tools, offer your help, even if it is only to get teams to ask themselves better questions so they arrive at the best measurements and data for their product.

Data can be daunting at first, but once you see how much benefit you get from understanding it, you will improve your product intuition and make your product better for customers.

For more information, please read this wonderful article by Julie Zhuo