Recently I had the chance to give a lightning talk for GenD (the data, design, and digital school I collaborate with) on a product management topic. I chose to talk about identifying, prioritising, and testing assumptions.

This topic is near and dear to my heart because I've suffered the pain of not doing it at all, or not doing it enough. IMO it is one of the most undervalued topics in the industry, and it is responsible for epic failures, wasted time, and many disappointments.

During the talk, I covered the following: Why, When, Who, What, How, and How much.

Let’s dive in…

Why

This quote highlights, in a funny way, why assuming is a bad idea. The two examples I can bring to illustrate it are quite obvious:

In 1999, NASA lost the Mars Climate Orbiter, a project built in collaboration with Lockheed Martin. As you can read in the article, things didn't go very well. Both partners assumed that their counterpart would use the same system of units. It turned out not to be the case (one side worked in imperial units, the other in metric), and $125 million was lost.

If you want a more first-hand account of failures caused by not identifying and testing assumptions, ask the author of this article. One of my first features as PM of integrations at Nexthink was a connector that was supposed to be "the next big thing" in terms of ServiceNow CMDB capabilities. It was nothing like that. We were never able to get customers to adopt that connector, and we later decided to retire it from the ServiceNow platform.

When

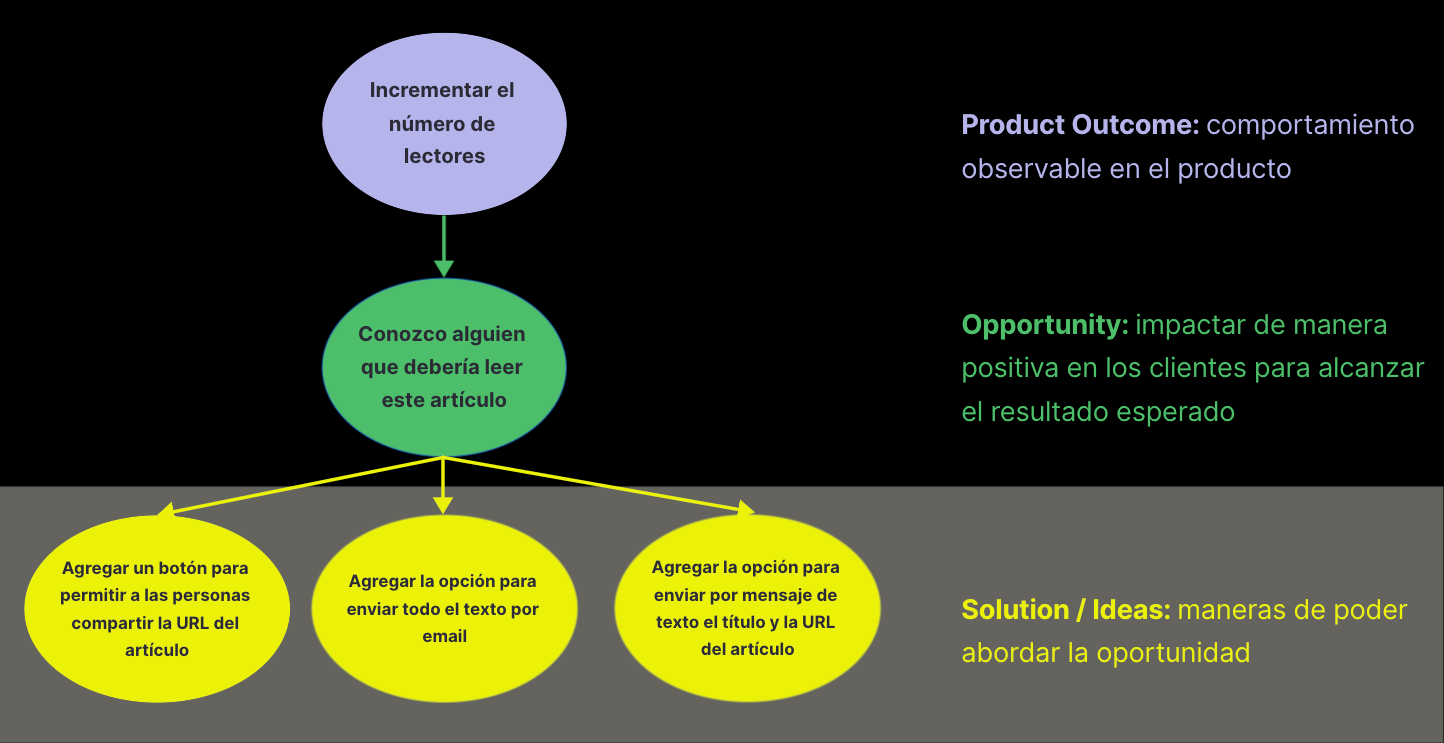

Being able to identify, prioritise, and test assumptions and risks is mainly done when you are doing product discovery, especially when you must decide what to build. If you are familiar with the Opportunity-Solution tree from Teresa Torres, I’d draw it as follows:

As Teresa states in one of her articles:

Assumption testing helps us compare and contrast multiple solutions against each other. So I like to run assumption tests after I’ve chosen a target opportunity and selected three potential ideas to explore.

That said, when you are in the delivery phase defining the scope of the first release, I like to push engineers to expose the risks we might not have considered in discovery (since we haven't built the entire solution yet). Relevant topics always emerge from this exercise.

Who

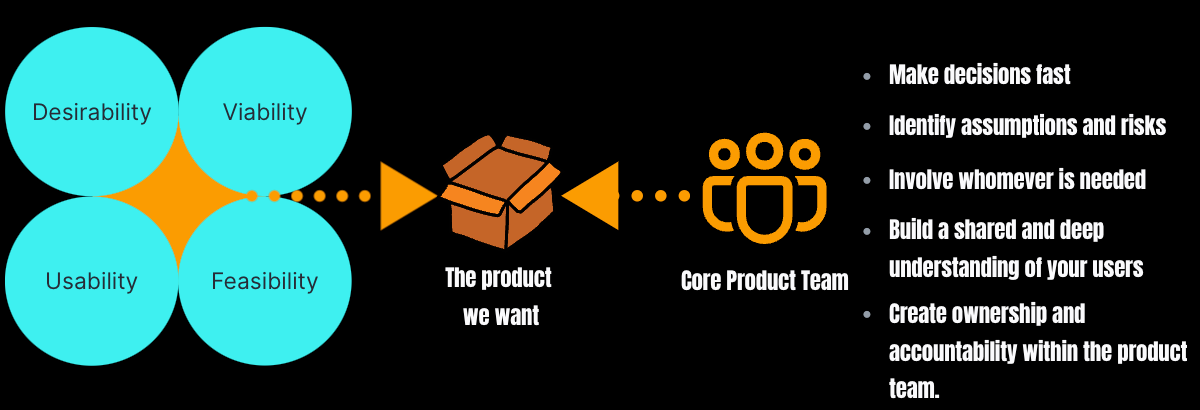

People might assume that most of this work should be done by the PM. But as we know from a great book and many talks, we must consider assumptions and risks that fall into four categories: Desirability, Usability, Viability, and Feasibility.

There is no way a single person could cover all of these and be comprehensive at the same time. Therefore, we should consider which people to include to get as much coverage as possible on each of these dimensions. The team will look different for every product: sometimes there will be two of you (as in my early days at Nexthink), sometimes four (as later on at Nexthink), and other times three.

Quantity doesn't guarantee that you expose as many assumptions and risks as possible; having people with the required skills is what makes the difference.

What

Exposing assumptions and risks is the first step, but the work doesn't stop there. If you only identify them, it's like gathering all the ingredients for a great filet mignon: they will rot if you don't prepare and cook them.

The same happens with assumptions and risks. Besides identifying them, you must prioritise and test them to know whether the truth you imagine in your head is the right one, and whether the risks are going to kill your idea.

How

For identifying, I love using story mapping and pre-mortems. Here are some of the references I have used in the past to facilitate these sessions: Story Mapping, Pre-mortem @Stripe, and Pre-mortem by Giff Constable.

For prioritising, the go-to tools are David Bland's assumption mapping and the 2x2 matrix created by Jeff Patton and Jeff Gothelf.

For validating, you can use either the techniques suggested by Teresa Torres (Prototype Tests, Question Surveys, Data Mining, and Research Spikes) or the wide variety of techniques available in the book Testing Business Ideas by David J. Bland and Alexander Osterwalder.
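To make the prioritisation step a bit more concrete, here is a minimal Python sketch of the assumption-mapping idea. It assumes the two axes commonly used in David Bland's version: how important an assumption is to the idea's success, and how much evidence we already have for it. The `Assumption` class, the 1 to 5 scores, the midpoint threshold, the quadrant labels, and the example assumptions are all hypothetical choices for illustration, not part of Bland's method; in a real session the team places sticky notes on a board rather than numbers in code.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    # Hypothetical structure: scores use an assumed 1-5 scale agreed by the team.
    statement: str
    category: str    # Desirability, Usability, Viability, or Feasibility
    importance: int  # 1 = barely matters, 5 = the idea fails if this is false
    evidence: int    # 1 = no evidence at all, 5 = strong evidence


MIDPOINT = 3  # assumed threshold that splits each axis into two halves


def quadrant(a: Assumption) -> str:
    """Place an assumption in one of the four (hypothetically named) quadrants."""
    important = a.importance >= MIDPOINT
    unproven = a.evidence < MIDPOINT
    if important and unproven:
        return "test first"  # important and little evidence: the riskiest bets
    if important:
        return "monitor"     # important, but already backed by evidence
    if unproven:
        return "defer"       # unproven, but not critical right now
    return "ignore"


def prioritise(assumptions: list[Assumption]) -> list[Assumption]:
    """Keep the 'test first' quadrant, most important and least proven first."""
    to_test = [a for a in assumptions if quadrant(a) == "test first"]
    return sorted(to_test, key=lambda a: (-a.importance, a.evidence))


# Made-up example assumptions, one per category.
backlog = [
    Assumption("Customers want data synced automatically", "Desirability", 5, 1),
    Assumption("The partner API allows hourly syncs", "Feasibility", 4, 2),
    Assumption("Customers will pay for the integration", "Viability", 5, 4),
    Assumption("Users can configure it without help", "Usability", 2, 1),
]

for a in prioritise(backlog):
    print(a.category, "-", a.statement)
```

With these made-up scores, only the Desirability and Feasibility assumptions land in the "test first" quadrant, in that order; those are the ones you would take into the validation techniques above.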

How much

One of the biggest questions asked at conferences, and of the experts I've mentioned, is "How do I know when to stop testing ideas?", which mirrors "How do I know when to stop validating assumptions and risks?"

I wish I had a formula that would tell you the exact steps you must execute, how many assumptions and hypotheses you need to test, and of course when to stop. Right off the bat, I can tell you that most of the time you won't be 100% certain about when to stop.

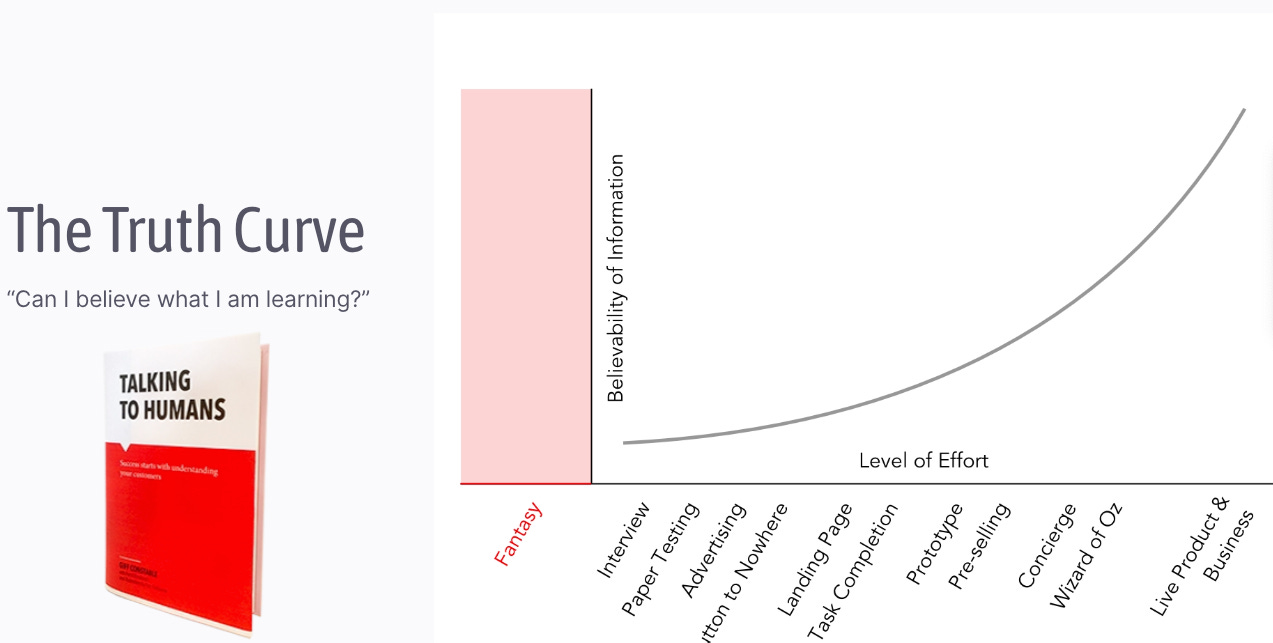

I love using a concept that is introduced in the book Talking to Humans from Giff Constable which is call the truth curve:

The idea is quite simple. When you are debating ideas and what to build without any feedback from, or contact with, customers or users, you live in the fantasy world (the red area). As Giff explains:

However, real truth is in the upper right: your product is live and people are buying or not buying, using or not using, retaining or not retaining. Your data is your proof. However, you can’t wait that long to test your ideas or you will make too many mistakes, waste too much time, and have needlessly costly failures.

I've used this concept a handful of times, and it has always helped me understand how far we should keep investing in learning. Here are some examples from my time at Nexthink:

Feature Enhancement:

Brand-new Feature:

Brand-new Product:

As you can see, the level of effort to invest depends on what you want to validate. This chart keeps me honest: it reminds me that, to create a new feature, I can gain the confidence I need in less time than it would take to build it directly, which could take twice as long.

Conclusions

Never assume, because you know what the consequences are.

Identifying assumptions and risks is necessary, but not sufficient.

Use story mapping or pre-mortems to expose both assumptions and risks.

Be mindful of the effort you want to invest based on the information you are going to gain.